Big Objects to manage Salesforce data storage

Every Salesforce edition includes a limited data storage allocation. As your org goes live and becomes an active hub, the data created in it starts to accumulate. That is exactly what you want, because it is how you begin drawing value from your Salesforce implementation. However, it also means you need to start monitoring your org's data storage usage to make sure it isn't nearing or exceeding its allocation.

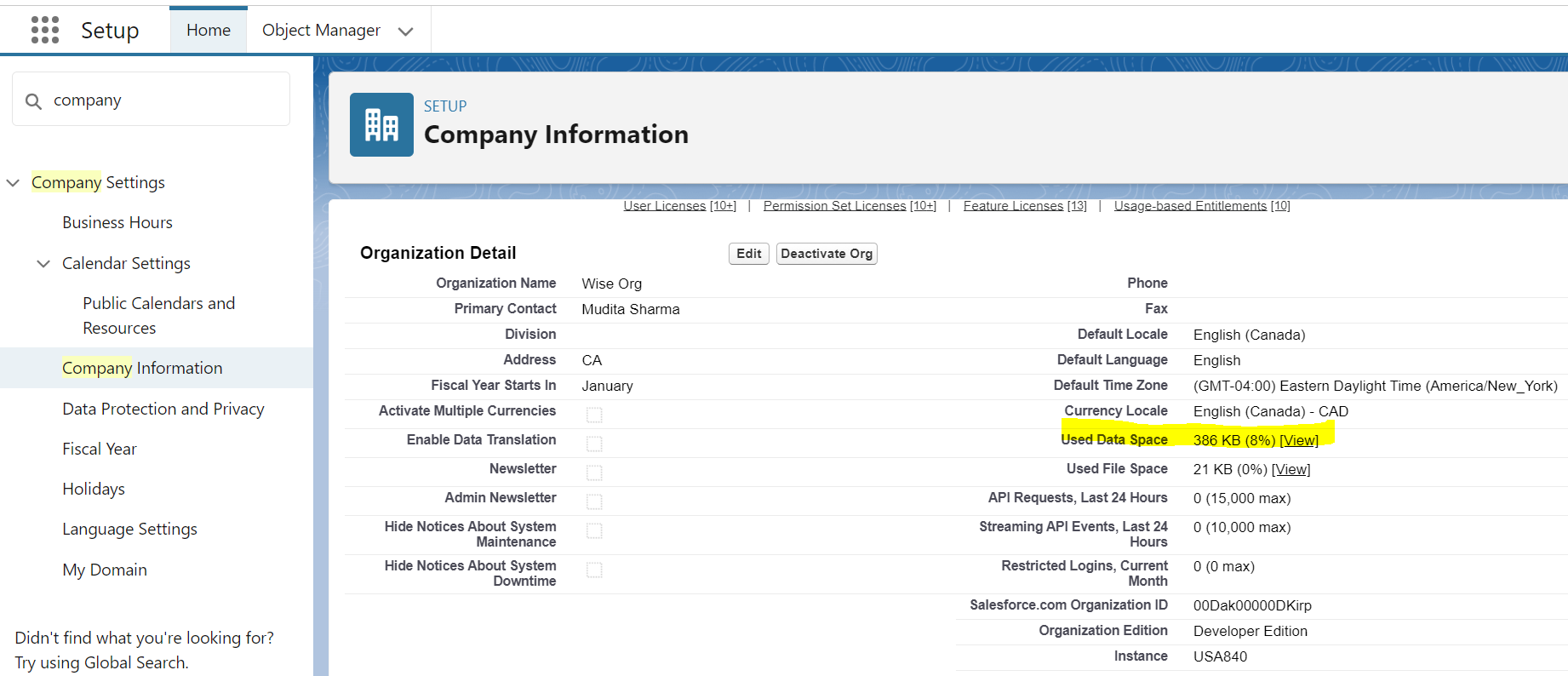

You can find your org's storage usage and limits by navigating to Setup > Company Information, and then clicking the View link next to Used Data Space.

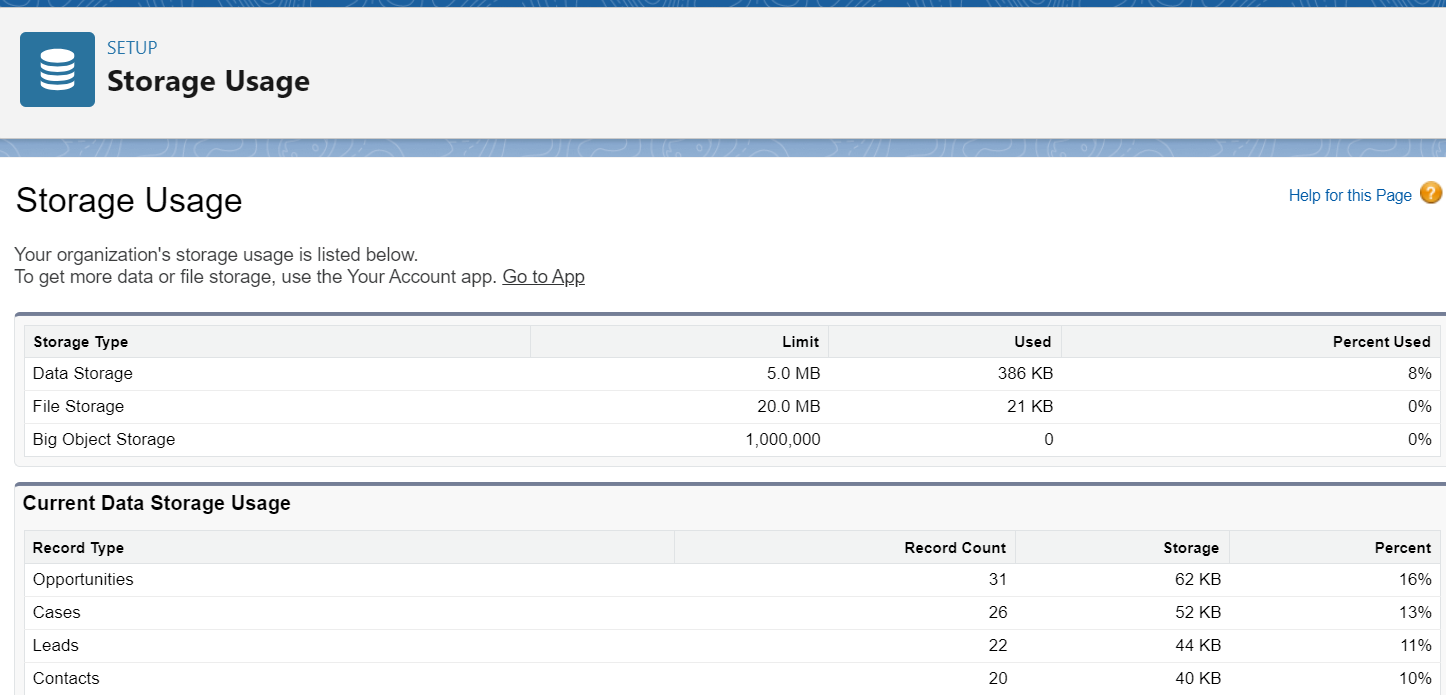

This opens the Storage Usage page, which shows your storage limits and current usage. The page also breaks down your current data storage usage by record type.

If your overall data storage usage is above 50%, review the record types that are accumulating large record counts and contributing the most to that usage.
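The 50% rule of thumb can be applied mechanically to figures copied from the Storage Usage page. This is only an illustrative sketch: the `StorageRow` type, the `review_candidates` function, the 20% share threshold, and the sample numbers are all hypothetical. It assumes you have transcribed each record type's usage into megabytes yourself.

```python
from dataclasses import dataclass


@dataclass
class StorageRow:
    record_type: str   # label as shown on the Storage Usage page, e.g. "Email Messages"
    storage_mb: float  # storage used by this record type, converted to MB


def review_candidates(rows, limit_mb, overall_threshold=0.5, share_threshold=0.2):
    """Return record types worth reviewing, largest first.

    Nothing is flagged while total usage is at or below overall_threshold of
    the allocation. Above it, any record type holding at least share_threshold
    of the *used* storage is returned.
    """
    used_mb = sum(r.storage_mb for r in rows)
    if used_mb / limit_mb <= overall_threshold:
        return []
    flagged = [r for r in rows if r.storage_mb / used_mb >= share_threshold]
    return sorted(flagged, key=lambda r: r.storage_mb, reverse=True)


# Hypothetical figures: 800 MB used of a 1,000 MB allocation (80%).
rows = [
    StorageRow("Email Messages", 600.0),
    StorageRow("Cases", 150.0),
    StorageRow("Contacts", 50.0),
]
print([r.record_type for r in review_candidates(rows, limit_mb=1000.0)])  # ['Email Messages']
```

The share threshold is measured against used storage rather than the allocation, so it answers "what is contributing most" once the overall 50% line has been crossed.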

Best practice is to have an archival strategy, and an implementation of it, in place so that data is routinely archived to external storage. Additionally, if some data needs to remain in your active Salesforce org but contributes significantly to your data storage usage, you can use Salesforce Big Objects to manage it: migrate the sObject records to a new custom Big Object, and then delete the original records from the sObject to free up storage. Big Object records are counted against a separate Big Object allocation rather than your data storage.

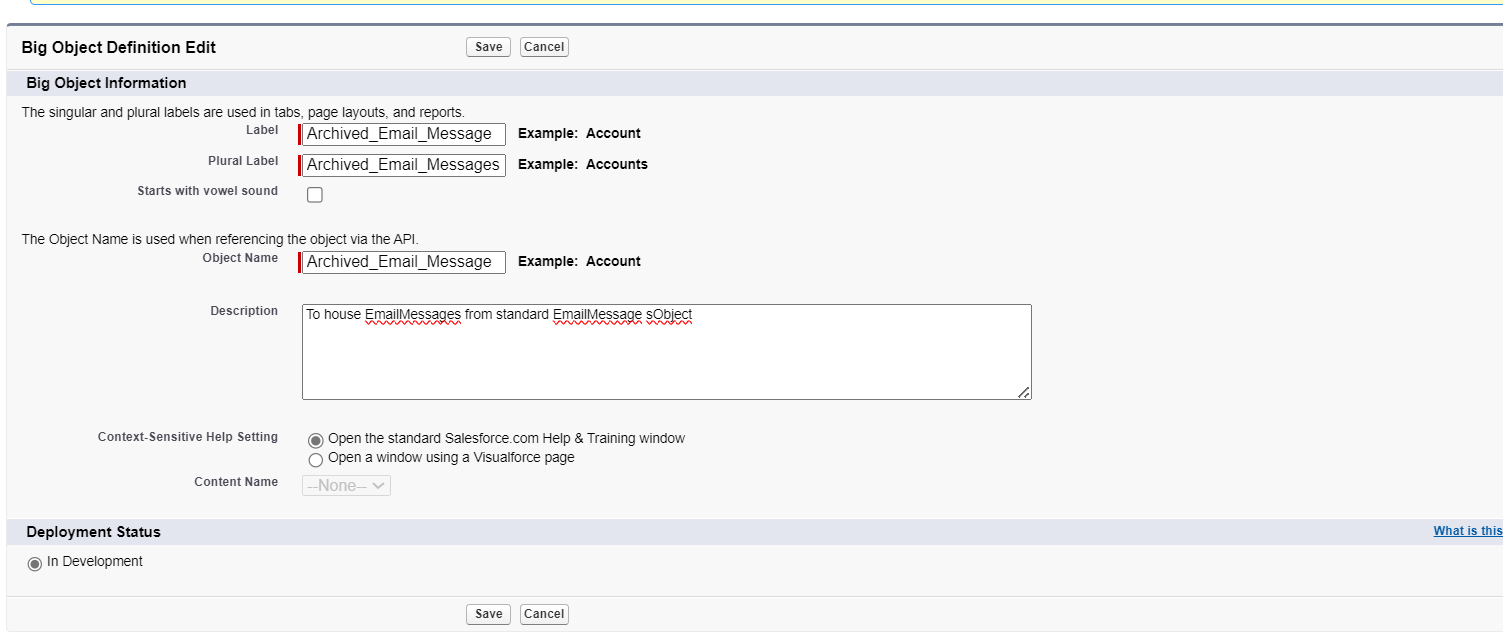

For example, if EmailMessage records are occupying a large amount of storage, create a custom Big Object to house them. Migrate the EmailMessage records to the custom Big Object, and then delete the originals from the EmailMessage object to free up data space.

In Salesforce, Big Objects are used to store very large amounts of data. They are not the same as regular Salesforce objects, and they have their own considerations and methods for inserting data. You can insert records into a Big Object from CSV files with the Salesforce Bulk API, or with custom Apex code that calls Database.insertImmediate(), since standard Apex DML statements such as insert don't apply to Big Objects.

Here's an outline of the steps to create a custom Big Object that houses EmailMessage records and to populate it with the Salesforce Bulk API:

- Define the schema for the custom Big Object.

- Create the Big Object in Salesforce.

- Export the EmailMessage records to a CSV file.

- Use the Salesforce Bulk API to insert the records into the custom Big Object.

- Delete the original EmailMessage records to free up storage space.
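Before the final deletion step, it is worth confirming that every record you are about to delete actually landed in the Big Object. A minimal sketch, assuming you have the exported EmailMessage Ids plus the original Ids read back from the Big Object (stored here in a hypothetical `SourceId__c` field). `ids_safe_to_delete` and `delete_file` are illustrative helpers, not part of any Salesforce tool, and the sample Ids are made up.

```python
import csv
import io


def ids_safe_to_delete(exported_ids, archived_source_ids):
    """Return exported EmailMessage Ids that were confirmed in the Big Object.

    Ids that were exported but not found in the Big Object are left out,
    so those records stay in the org until a later run archives them.
    """
    confirmed = set(archived_source_ids)
    return [record_id for record_id in exported_ids if record_id in confirmed]


def delete_file(ids):
    """Render a one-column CSV with an Id header, the shape a Data Loader delete expects."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["Id"])
    for record_id in ids:
        writer.writerow([record_id])
    return out.getvalue()


exported = ["02s000000000001AAA", "02s000000000002AAA", "02s000000000003AAA"]
archived = ["02s000000000001AAA", "02s000000000003AAA"]  # hypothetical: read back via SOQL
print(delete_file(ids_safe_to_delete(exported, archived)))
```

Deleting only confirmed Ids means a partially failed load never costs you data; the unconfirmed records simply wait for the next run.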

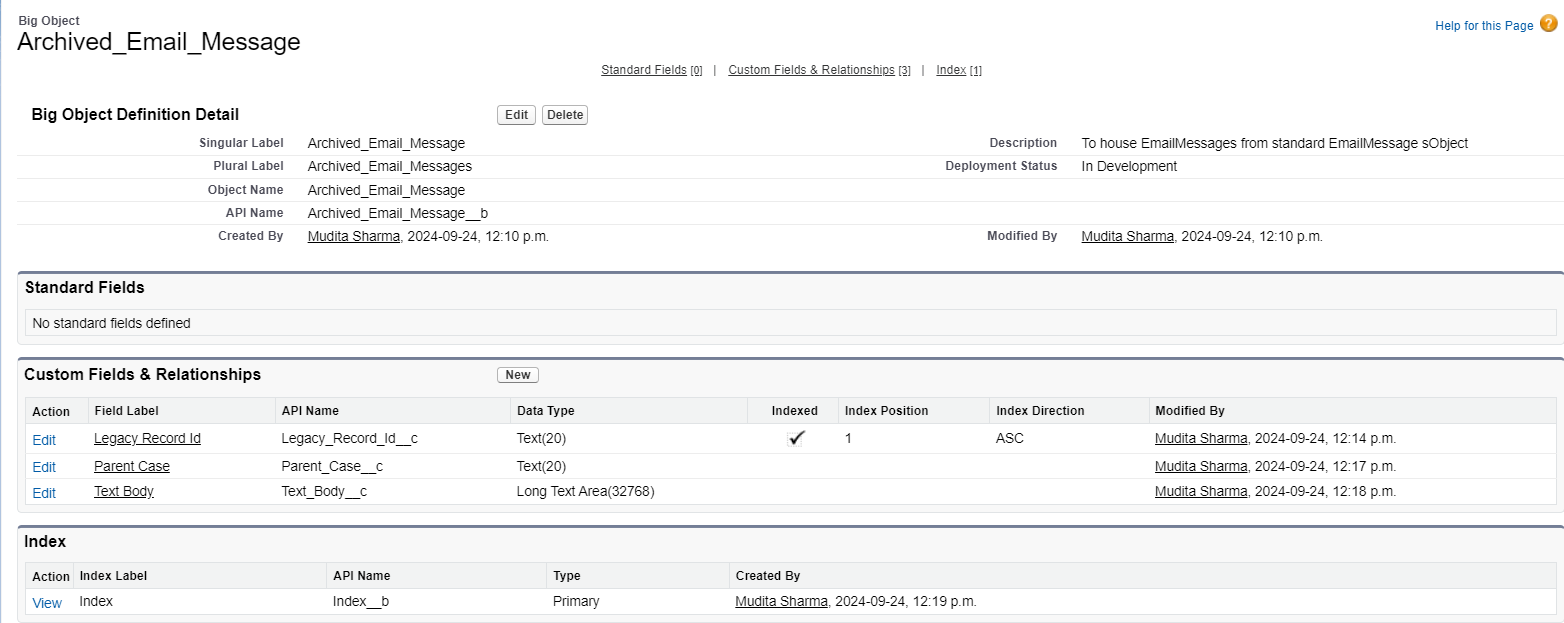

1. Navigate to Setup > Big Objects > New. Define the fields and the index that will store values from the standard EmailMessage sObject.

2. Use Data Loader to export the EmailMessage records that meet your purge criteria. The exported CSV file holds the data to insert into your Big Object, so make sure its columns match the fields in the Big Object.
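Making the columns match usually means renaming the exported headers to the Big Object's field API names and dropping everything else. A minimal sketch, assuming a hypothetical `EmailArchive__b` Big Object whose fields are named in `FIELD_MAP`; header case can vary between export tools, so matching is case-insensitive.

```python
import csv
import io

# Hypothetical mapping: EmailMessage field -> custom Big Object field.
FIELD_MAP = {
    "Id": "SourceId__c",
    "ParentId": "ParentId__c",
    "MessageDate": "MessageDate__c",
    "Subject": "Subject__c",
    "TextBody": "TextBody__c",
}


def remap_export(src, dst, field_map=FIELD_MAP):
    """Copy an EmailMessage CSV export to a CSV whose headers are Big Object field names.

    Headers are matched case-insensitively; unmapped columns are dropped, and a
    ValueError is raised if any mapped column is missing from the export.
    """
    reader = csv.DictReader(src)
    lookup = {name.lower(): target for name, target in field_map.items()}
    columns = [(h, lookup[h.lower()]) for h in reader.fieldnames if h.lower() in lookup]
    missing = set(lookup) - {h.lower() for h, _ in columns}
    if missing:
        raise ValueError(f"export is missing columns: {sorted(missing)}")
    writer = csv.writer(dst, lineterminator="\n")
    writer.writerow([target for _, target in columns])
    for row in reader:
        writer.writerow([row[h] for h, _ in columns])


export = io.StringIO(
    "ID,PARENTID,MESSAGEDATE,SUBJECT,TEXTBODY,STATUS\n"
    '02s000000000001AAA,500000000000001AAA,2023-01-05T10:00:00.000Z,Hello,"Body, with comma",3\n'
)
converted = io.StringIO()
remap_export(export, converted)
print(converted.getvalue())
```

For real files, open both with `newline=""` and pass the file objects in place of the `StringIO` buffers; the `csv` module then handles quoted commas and line breaks inside email bodies.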

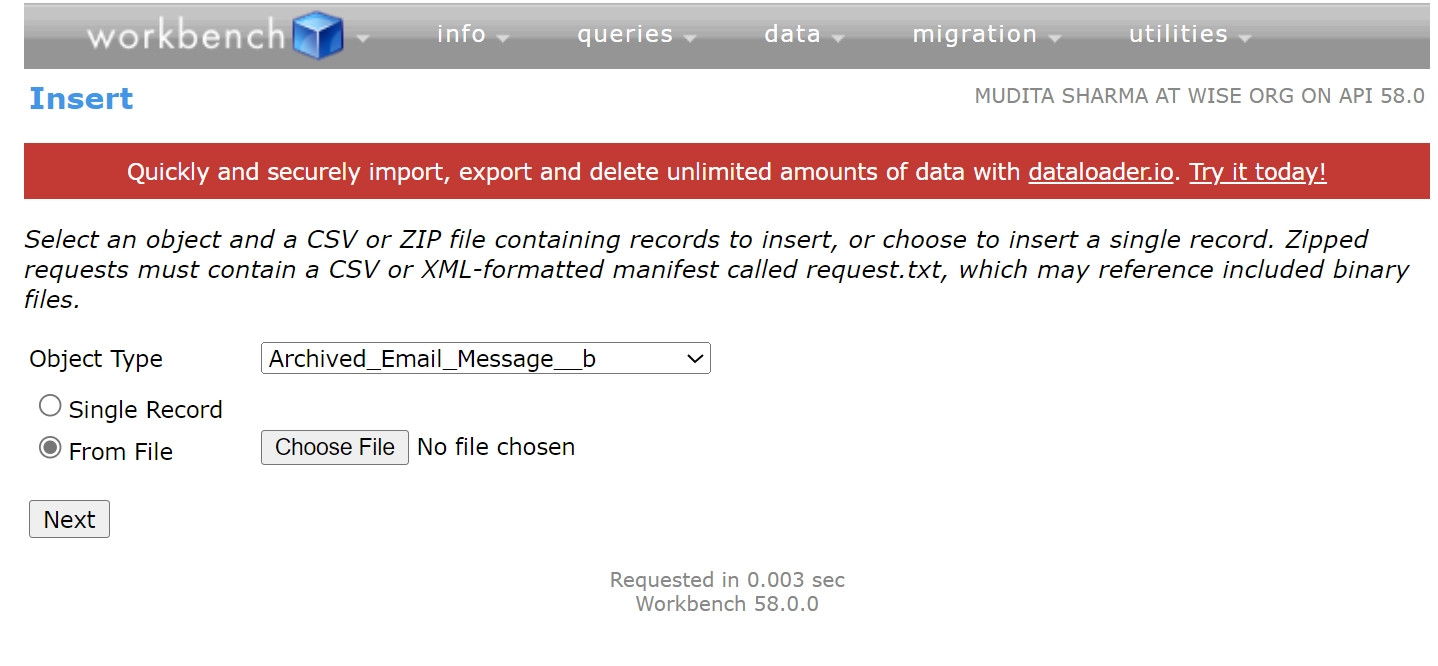

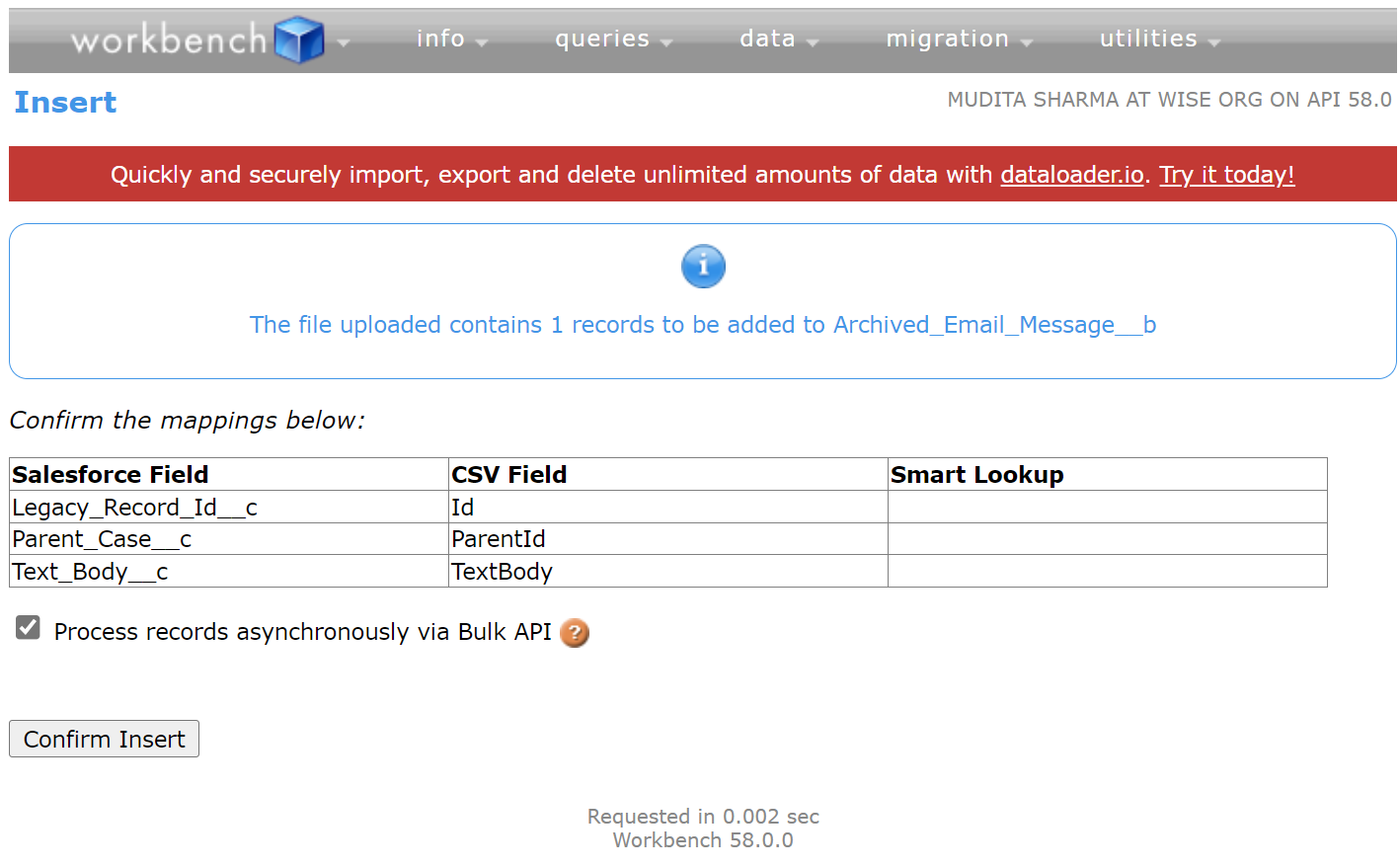

You can use any tool that supports the Bulk API, such as Salesforce Data Loader. This example uses Workbench and works through a single sample record. Log in to Workbench for your Salesforce org.

- Select data > Insert.

- Choose your CSV file.

- Map the fields from the CSV to the fields in your Big Object.

- Click Next and then Finish to start the insertion process.
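Because a Big Object's index also serves as its unique key, our understanding of the Salesforce documentation is that loading a row whose index values match an existing record overwrites that record rather than adding a second one. Before a real load, a quick check like this one shows whether the chosen index is selective enough for the CSV. It is a sketch: `duplicate_index_keys` is a hypothetical helper, and the field names assume an illustrative index of `ParentId__c`, `MessageDate__c`, and `SourceId__c`.

```python
import csv
import io
from collections import defaultdict


def duplicate_index_keys(csv_text, index):
    """Map each repeated index key to the 1-based data-row numbers that share it."""
    rows_by_key = defaultdict(list)
    reader = csv.DictReader(io.StringIO(csv_text))
    for row_number, row in enumerate(reader, start=1):
        key = tuple(row[field] for field in index)
        rows_by_key[key].append(row_number)
    return {key: numbers for key, numbers in rows_by_key.items() if len(numbers) > 1}


sample = (
    "ParentId__c,MessageDate__c,SourceId__c\n"
    "5000001,2023-01-05T10:00:00.000Z,02s0001\n"
    "5000001,2023-01-05T10:00:00.000Z,02s0002\n"
)
# Two emails on the same case at the same timestamp collide without SourceId__c...
print(duplicate_index_keys(sample, ["ParentId__c", "MessageDate__c"]))
# ...and are distinct once the original EmailMessage Id is part of the index.
print(duplicate_index_keys(sample, ["ParentId__c", "MessageDate__c", "SourceId__c"]))
```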

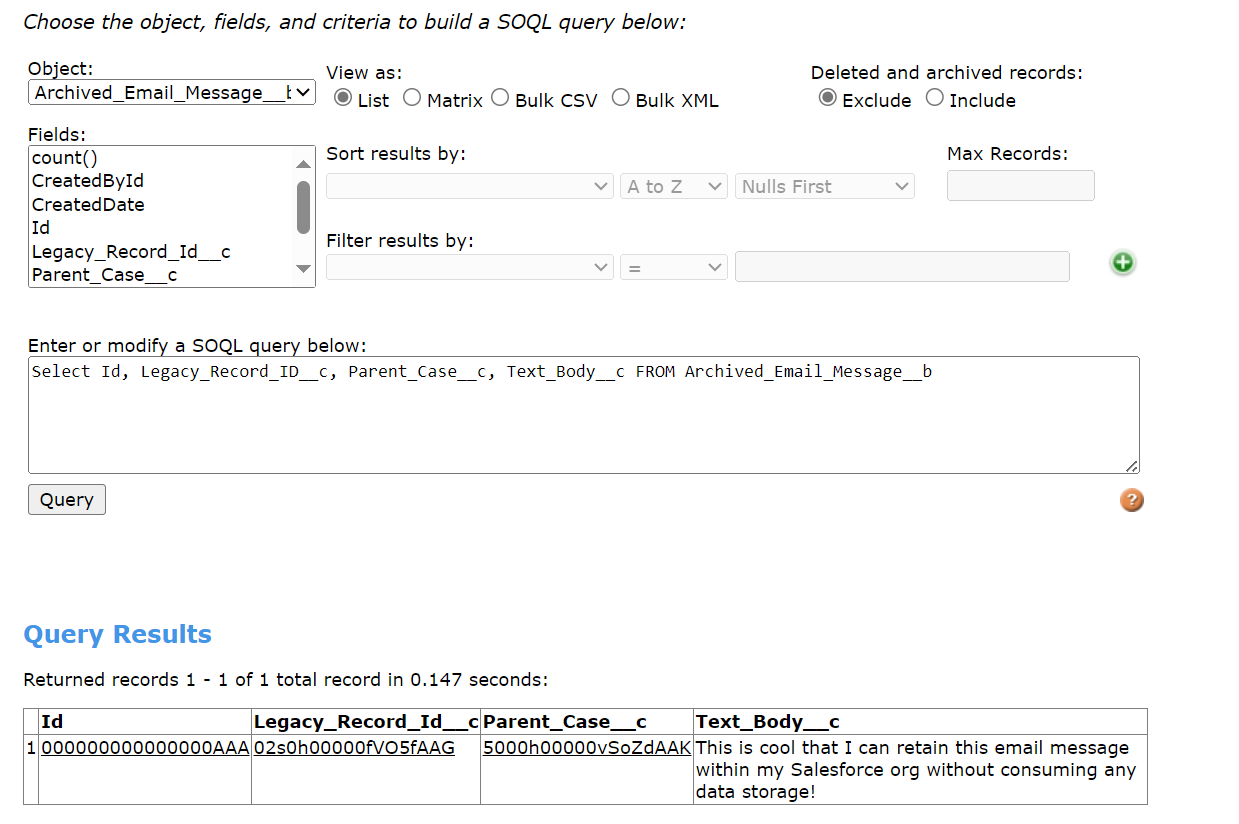

The Big Object records are now available to query with SOQL.
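SOQL on Big Objects is restricted by the index. As we read Salesforce's documentation, filters must start with the first index field and continue in index order without gaps; only the last filtered field may use `<`, `>`, `<=`, `>=`, or `IN`, and every earlier one must use `=`. The sketch below builds a query string and enforces those rules; `big_object_soql`, `EmailArchive__b`, and its fields are hypothetical, and literals are inserted verbatim, so it must not be fed untrusted input.

```python
def big_object_soql(object_name, select_fields, index, filters):
    """Build a SOQL query string for a Big Object, enforcing index-order rules.

    index   -- the Big Object's index fields, in the order they are defined
    filters -- (field, operator, literal) tuples; literals are inserted verbatim,
               so quote strings yourself and never pass untrusted input
    """
    if len(filters) > len(index):
        raise ValueError("more filters than index fields")
    for position, (field, operator, _) in enumerate(filters):
        if field != index[position]:
            raise ValueError(f"filter {position + 1} must use {index[position]}, not {field}")
        is_last = position == len(filters) - 1
        allowed = {"=", "<", ">", "<=", ">=", "IN"} if is_last else {"="}
        if operator not in allowed:
            raise ValueError(f"operator {operator} is not allowed on {field} here")
    query = f"SELECT {', '.join(select_fields)} FROM {object_name}"
    if filters:
        query += " WHERE " + " AND ".join(f"{f} {op} {lit}" for f, op, lit in filters)
    return query


index = ["ParentId__c", "MessageDate__c", "SourceId__c"]
print(big_object_soql(
    "EmailArchive__b",
    ["Subject__c", "MessageDate__c"],
    index,
    [("ParentId__c", "=", "'500000000000001AAA'"),
     ("MessageDate__c", ">=", "2023-01-01T00:00:00Z")],
))
```

Catching an out-of-order filter locally is cheaper than discovering it as a query error, and it makes the consequences of the index order visible when you design it.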

To report on a subset of the data, use the Bulk API to run a query that extracts a smaller, representative subset of the data you're interested in. You can store this working dataset in a custom object and use it in reports, dashboards, or any other Lightning Platform feature.

Create a custom object to hold the working dataset for the Big Object data you want to report on, and select its Allow Reports option. Add custom fields to the object that match the Big Object fields you want to report on.

Write a SOQL query that builds your working dataset by pulling data from your Big Object into your custom object. To keep the working dataset up to date for accurate reporting, schedule this job to run nightly.
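The transform step of that nightly job might look like the sketch below. It assumes the Big Object rows have already been fetched with SOQL as dictionaries, and that, with a hypothetical index of `ParentId__c`, `MessageDate__c`, `SourceId__c`, the date window is applied after the query because a range on `MessageDate__c` would first need an equality filter on `ParentId__c`. The reporting object's fields, including `Source_Id__c` as an External ID so each nightly load can upsert rather than duplicate, are also assumptions. Scheduling itself is not shown.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical mapping: Big Object field -> reporting custom object field.
REPORT_FIELDS = {
    "SourceId__c": "Source_Id__c",   # assumed External ID on the custom object
    "ParentId__c": "Case__c",
    "MessageDate__c": "Message_Date__c",
    "Subject__c": "Subject__c",
}


def parse_sf_datetime(value):
    """Parse an ISO 8601 datetime such as 2023-01-05T10:00:00.000Z."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def working_dataset(rows, now, days=30, field_map=REPORT_FIELDS):
    """Keep rows from the last `days` days and rename fields for the custom object."""
    cutoff = now - timedelta(days=days)
    return [
        {target: row.get(source) for source, target in field_map.items()}
        for row in rows
        if parse_sf_datetime(row["MessageDate__c"]) >= cutoff
    ]


rows = [
    {"SourceId__c": "02s0001", "ParentId__c": "5000001",
     "MessageDate__c": "2023-01-20T09:00:00.000Z", "Subject__c": "Recent"},
    {"SourceId__c": "02s0002", "ParentId__c": "5000002",
     "MessageDate__c": "2022-11-01T09:00:00.000Z", "Subject__c": "Old"},
]
now = datetime(2023, 2, 1, tzinfo=timezone.utc)
print(working_dataset(rows, now))
```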

Additional Tips

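Indexing Fields: Every Big Object needs an index of at least one field, and that index largely determines how you can query and manipulate the data. SOQL filters on a Big Object have to follow the index fields in the order they're defined, so choose and order the index fields around the queries you expect to run.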
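A proposed index can be sanity-checked before you build it in Setup. The rules encoded here reflect our reading of Salesforce's Big Object documentation at the time of writing: one to five index fields, each marked required, no Long Text Area or URL fields, and no more than 100 characters in total across indexed Text fields. Verify them against the current docs. The `Field` type, `index_problems`, the type labels, and the `EmailArchive__b` fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Field:
    api_name: str
    field_type: str       # illustrative labels: "Text", "LongTextArea", "Url", "DateTime", ...
    length: int = 0       # character length; only counted for "Text" fields
    required: bool = False


def index_problems(fields, index):
    """Check an ordered list of index field names; return a list of problems (empty if none)."""
    by_name = {f.api_name: f for f in fields}
    problems = []
    if not 1 <= len(index) <= 5:
        problems.append(f"index has {len(index)} fields; expected 1 to 5")
    text_chars = 0
    for name in index:
        field = by_name.get(name)
        if field is None:
            problems.append(f"{name}: not defined on the object")
            continue
        if not field.required:
            problems.append(f"{name}: index fields must be required")
        if field.field_type in ("LongTextArea", "Url"):
            problems.append(f"{name}: {field.field_type} fields can't be indexed")
        if field.field_type == "Text":
            text_chars += field.length
    if text_chars > 100:
        problems.append(f"indexed Text fields total {text_chars} characters; limit is 100")
    return problems


# Hypothetical fields for an EmailArchive__b Big Object.
fields = [
    Field("ParentId__c", "Text", 18, required=True),
    Field("MessageDate__c", "DateTime", required=True),
    Field("SourceId__c", "Text", 18, required=True),  # original EmailMessage Id
    Field("Subject__c", "Text", 255),
    Field("TextBody__c", "LongTextArea", 131072),
]
print(index_problems(fields, ["ParentId__c", "MessageDate__c", "SourceId__c"]))  # []
```

Indexing the subject or body instead would trip several of these checks at once, which is one reason short identifier and date fields tend to make better index candidates.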

Data Volume: Big Objects are designed to handle large data sets, but be mindful of how much data you insert at once; very large loads might still require optimization, such as splitting the data into smaller batches.
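If you script your own loads instead of relying on Data Loader's batch size setting, large CSV files need to be split into batches. This sketch defaults to 10,000 records and 10,000,000 bytes per batch, which we believe sits within Salesforce's published Bulk API (1.0) batch limits of 10,000 records and 10 MB; confirm the current limits before relying on them. `chunk_csv` is a hypothetical helper. It serializes each row with the `csv` module so email bodies containing line breaks stay intact.

```python
import csv
import io


def _serialize(row):
    """Render one row as a CSV record, quoting embedded commas and line breaks."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerow(row)
    return buf.getvalue()


def chunk_csv(header, rows, max_records=10_000, max_bytes=10_000_000):
    """Split rows into CSV batches, each starting with the header record.

    A batch is closed before it would exceed max_records data records or
    max_bytes (UTF-8, header included).
    """
    head = _serialize(header)
    head_bytes = len(head.encode("utf-8"))
    batches, current, current_bytes = [], [], 0
    for row in rows:
        record = _serialize(row)
        record_bytes = len(record.encode("utf-8"))
        if head_bytes + record_bytes > max_bytes:
            raise ValueError("a single record is larger than max_bytes")
        full = len(current) == max_records
        too_big = head_bytes + current_bytes + record_bytes > max_bytes
        if current and (full or too_big):
            batches.append(head + "".join(current))
            current, current_bytes = [], 0
        current.append(record)
        current_bytes += record_bytes
    if current:
        batches.append(head + "".join(current))
    return batches


batches = chunk_csv(["A", "B"], [["1", "2"], ["3", "4"], ["5", "6"]], max_records=2)
print(batches)  # ['A,B\n1,2\n3,4\n', 'A,B\n5,6\n']
```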

Data Loader Alternatives: Besides Data Loader, you can also leverage tools like Workbench, Salesforce CLI, or third-party ETL tools to interact with Big Objects via the Bulk API.

You now have a few different methods to insert records into Big Objects in Salesforce. Choose the one that best fits your use case and technical comfort level.